Image Source: Wikimedia, Author – Esquivalience

Wouldn’t it be great if we had a system that could write billions of lines of code by itself and develop almost defect-free critical applications of many kinds in minimal time, without being programmed with task-specific rules? All we would have to give it as input is one or two already developed sample codebases. The IT industry could make a huge long-term profit from such a system, and there would be hardly any difference between a service-based IT company and a product-based one. Application development of this kind is still technology fiction, but similar systems already dominate image recognition, speech recognition, social network filtering, board and video games, medical diagnosis, and even activities traditionally considered reserved for humans, such as painting.

Artificial Self-learning:

Have you ever thought about why children dream more than adults while sleeping? It is probably because their brains remain more biased toward the concrete world than toward abstraction. The systems mentioned above have one thing in common: they are like children. They don’t care for advice; they look for examples. The soul of these systems is a family of techniques called “Feature Learning” or “Representation Learning”, which improves automatically through experience. It allows a system to discover, from raw data, the representations needed for feature detection or classification, and so enables the system to learn the features and use them to perform a specific task. Previously that task was limited to identifying a subject, but, keeping pace with human intelligence, these techniques have improved to such an extent that they can now get systems to imagine new things!

Breeds of Artificial Self-learning:

Artificial self-learning is mainly of two types. The first is ‘Supervised Feature Learning’, or learning features from labeled data. For example, a system capable of image recognition can learn to identify images that contain human beings by analyzing example images that have been manually labeled as “human being” or “not human being”.
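To make the idea of learning from labeled examples concrete, here is a minimal sketch in Python. It is not how a real image recognizer works: the two numbers per example are made-up stand-ins for image features, and the nearest-centroid rule is just one simple technique chosen for illustration. The function names `train_centroids` and `predict` are hypothetical.

```python
# Toy supervised learning: the program is never given a rule, only labeled examples.
# Each example is (features, label); the feature values are invented for illustration.

def train_centroids(examples):
    """Average the feature vectors of each label to get one centroid per label."""
    sums, counts = {}, {}
    for features, label in examples:
        acc = sums.setdefault(label, [0.0] * len(features))
        for i, value in enumerate(features):
            acc[i] += value
        counts[label] = counts.get(label, 0) + 1
    return {label: [s / counts[label] for s in acc] for label, acc in sums.items()}

def predict(centroids, features):
    """Return the label whose centroid is closest (squared Euclidean distance)."""
    def dist(centroid):
        return sum((a - b) ** 2 for a, b in zip(features, centroid))
    return min(centroids, key=lambda label: dist(centroids[label]))

labeled_examples = [
    ([0.9, 0.8], "human being"),
    ([0.8, 0.9], "human being"),
    ([0.1, 0.2], "not human being"),
    ([0.2, 0.1], "not human being"),
]

centroids = train_centroids(labeled_examples)
print(predict(centroids, [0.85, 0.75]))  # an unseen example near the "human being" group
print(predict(centroids, [0.15, 0.30]))  # an unseen example near the other group
```

The only knowledge the program has comes from the four labeled examples; changing the labels changes what it learns.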

The second type of artificial self-learning is ‘Unsupervised Feature Learning’. Here, features are learned from an unlabeled dataset and then employed in a supervised setting with labeled data to improve performance. Because labels are unavailable during the basic learning process, the system concentrates on the structure of the data itself: the low-dimensional features that underlie the high-dimensional input.
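As a rough illustration of finding low-dimensional structure without labels, the sketch below reduces 2-D points to a single learned feature. It uses principal component analysis computed by power iteration, which is only one of many unsupervised techniques and is assumed here for simplicity. The data and the names `principal_direction` and `encode` are hypothetical.

```python
import math

def principal_direction(points, iterations=100):
    """Find the direction of greatest variance in 2-D points via power iteration."""
    n = len(points)
    mean = [sum(p[i] for p in points) / n for i in range(2)]
    centered = [[p[0] - mean[0], p[1] - mean[1]] for p in points]
    # 2x2 covariance matrix of the centered data
    cov = [[sum(c[i] * c[j] for c in centered) / n for j in range(2)] for i in range(2)]
    v = [1.0, 0.0]  # arbitrary starting direction
    for _ in range(iterations):
        w = [cov[0][0] * v[0] + cov[0][1] * v[1],
             cov[1][0] * v[0] + cov[1][1] * v[1]]
        norm = math.hypot(w[0], w[1])
        v = [w[0] / norm, w[1] / norm]
    return mean, v

def encode(point, mean, direction):
    """Project a 2-D point onto the learned direction: one number instead of two."""
    return (point[0] - mean[0]) * direction[0] + (point[1] - mean[1]) * direction[1]

# Unlabeled data that lies roughly along a line (values invented for illustration)
unlabeled = [[1.0, 1.1], [2.0, 1.9], [3.0, 3.2], [4.0, 3.8], [5.0, 5.1]]

mean, direction = principal_direction(unlabeled)
features = [encode(p, mean, direction) for p in unlabeled]
print(direction)
print(features)
```

The one-number `features` could then be handed to a supervised learner together with whatever labels are available, which is the two-stage arrangement described above.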

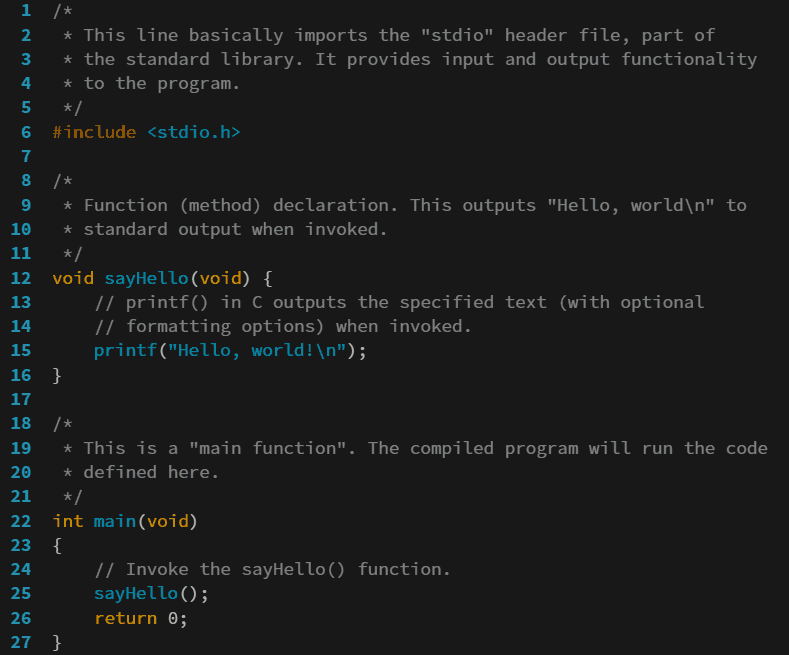

‘Supervised Feature Learning’ is in turn of two types: ‘Supervised Dictionary Learning’, which exploits both the structure and the labels of the input data, and the ‘Artificial Neural Network’.

Deep Neural Network:

The ‘Artificial Neural Network’ algorithm works like a network of distributed connection points, consisting of ‘Nodes’ and ‘Connections’, to learn, recognize, and even imagine! This algorithm actually tries to mimic an animal or human brain: the ‘Nodes’ behave as ‘Neurons’ and the ‘Connections’ act as the ‘Synapses’ of a biological brain. There is a ‘Weight’ associated with every ‘Node’ and ‘Connection’ that adjusts continuously as learning goes on, tuning the strength of the signal that flows through. In that sense, the role of the ‘Weight’ property of an ‘Artificial Neural Network’ resembles that of the number of ‘Neurotransmitter Receptors’ in the biological brain. A notable property of the ‘Artificial Neural Network’ algorithm is that its output can respond to even a very slight change in input.
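The role of the weights can be sketched with a single artificial neuron. The example below is a minimal illustration, not a description of any particular library: it assumes a sigmoid activation, a simple gradient-based update rule, and the logical OR function as the task, none of which come from the text above. The names `neuron_output` and `train` are hypothetical.

```python
import math

def sigmoid(x):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))

def neuron_output(weights, bias, inputs):
    """Weighted sum of the incoming signals, passed through the activation."""
    return sigmoid(sum(w * x for w, x in zip(weights, inputs)) + bias)

def train(samples, epochs=2000, learning_rate=0.5):
    """Adjust the weights a little after every example, as learning goes on."""
    weights, bias = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for inputs, target in samples:
            error = neuron_output(weights, bias, inputs) - target
            # Move each weight against the error, scaled by the input it carried
            weights = [w - learning_rate * error * x for w, x in zip(weights, inputs)]
            bias -= learning_rate * error
    return weights, bias

# Labeled examples of logical OR (an assumed toy task)
or_samples = [([0, 0], 0), ([0, 1], 1), ([1, 0], 1), ([1, 1], 1)]

weights, bias = train(or_samples)
predictions = [round(neuron_output(weights, bias, x)) for x, _ in or_samples]
print(weights, bias)
print(predictions)
```

The weights start at zero and end up wherever the examples push them; nothing in the code states what OR means.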

The word “Deep” denotes an ‘Artificial Neural Network’ containing multiple layers. Multiple layers enable an ‘Artificial Neural Network’ to progressively extract higher-level features from the raw input, which can help it learn with less error and in less time. For example, in image processing, lower layers may learn edges while higher layers may learn the concepts that are more relevant to us, such as digits, letters, or faces, which are themselves built up from edges. A ‘Deep Neural Network’ algorithm can be more efficient than the human brain, but it is not nearly as dynamic or plastic. For instance, a ‘Deep Neural Network’ trained for image processing cannot handle speech. This drawback might be overcome by letting the ‘Weight’ property of the network respond not only to the age of learning but also to the type of learning, but that is a matter of further research.
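A very small example can show why stacking layers helps. In the sketch below the first layer computes two simple features of the input (OR and AND), and the second layer combines them into exclusive-or (XOR), which no single neuron of this kind can compute. The weights are set by hand purely for illustration; a real network would learn them. The step activation and the names `layer` and `xor_net` are assumptions.

```python
def step(x):
    """Fire (1) if the incoming signal is positive, otherwise stay silent (0)."""
    return 1 if x > 0 else 0

def layer(inputs, weights, biases):
    """One layer: every neuron takes a weighted sum of all inputs, then fires or not."""
    return [step(sum(w * x for w, x in zip(row, inputs)) + b)
            for row, b in zip(weights, biases)]

# Hand-set weights (hypothetical, not learned).
# Hidden layer, neuron 1 detects "a OR b"; neuron 2 detects "a AND b".
HIDDEN_WEIGHTS = [[1, 1], [1, 1]]
HIDDEN_BIASES = [-0.5, -1.5]
# Output layer fires when OR is on but AND is off, which is XOR.
OUTPUT_WEIGHTS = [[1, -1]]
OUTPUT_BIASES = [-0.5]

def xor_net(a, b):
    hidden = layer([a, b], HIDDEN_WEIGHTS, HIDDEN_BIASES)   # lower-level features
    return layer(hidden, OUTPUT_WEIGHTS, OUTPUT_BIASES)[0]  # higher-level concept

for a, b in [(0, 0), (0, 1), (1, 0), (1, 1)]:
    print(a, b, xor_net(a, b))
```

The same pattern, repeated over many layers and many neurons, is what lets lower layers respond to edges while higher layers respond to digits or faces.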